BREAKING NEWS

- Redmi 13 5G Model Numbers Surface Online; Could Debut in India as Poco M7 Pro 5G

- Redmi Note 13 5G Series HyperOS Update Based on Android 14 Begins Rolling Out in India

- Xiaomi 14 Series ‘AI Treasure Chest’ With Several AI Tools in Testing, Could Debut This Year: Report

- Redmi Pad SE With 11-Inch Display, Snapdragon 680 Chipset and Android 13-Based MIUI Pad 14 Launched in India

- Xiaomi Tipped to Launch First Snapdragon 8 Gen 4-Powered Smartphone Ahead of OnePlus, iQoo

- CNA Explains: TikTok could be banned in the US. What would that mean for the rest of the world?

- Commentary: Even if TikTok is banned in the US, its users will find alternatives

- Trump’s three US Supreme Court appointees thrash out immunity claim

- HYBE, the agency behind BTS, files complaint against subsidiary head Min Hee-jin over breach of trust

- ‘Negative’ factors building in US-China ties, foreign minister Wang tells Blinken

Latest Stories

Tech & Gadgets

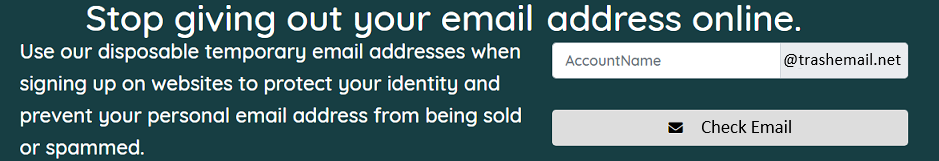

Tired of getting those mysterious password reset emails? Here’s what to do about it

Passwords can definitely be a frustrating part of our lives. Remembering which passwords you used for your dozens of different accounts is…

Fox News AI Newsletter: AI predicts your politics with single photo

The study used AI to predict people's political orientation based on images of expressionless faces. (American Psychologist) Welcome…

This off-road teardrop trailer adds luxury camping to the most remote locations

Are you looking for a camper that breaks away from the conventional teardrop design and blends functionality with sleek aesthetics? Meet the…

The AI camera stripping away privacy in the blink of an eye

It's natural to be leery regarding the ways in which people may use artificial intelligence to cause problems for society in the near…

11 insider tricks for the tech you use every day

If you're the person skipping updates on your devices … knock that off. You're missing out on important security enhancements—like iOS 17.4,…
